Most of the traffic on the Internet is sent in the clear. That's a fact. Back in the day the Internet was a trusting place. You'd think that, given it was developed as a DARPA (Defense Advanced Research Projects Agency) project, a lot of thought would have been put into security. Well, there was, and the thought process went something like this: "If you have access to the Internet you must be a good guy or you wouldn't have access to the Internet." There's not a lot of point wasting expensive processing cycles on encryption when you trust everyone on the network anyway.

So a whole fleet of protocols sprang up where everything is sent in the clear. Most didn't use usernames or passwords, but those that did happily sent them in clear text over the wire. Protocols like http, ftp, smtp, ntp, dns, snmp, rip and so on are all in the clear. As time went on some of these had encryption strapped on, but for the most part, if you take a packet analyzer like Wireshark and have a look at the traffic on your home network (which you have written permission to analyse/sniff), you'll see a lot of clear text going across the wire.

Of course this is only a problem if an eavesdropper has access to the routers that your data passes through. Normally that's not an issue because it's difficult to get physical access to this equipment in order to sniff the traffic. This all changes when we start talking about wireless networks, as the data is now transmitted through the air and those signals can travel a long way. Anyone within range of your signal can see your data pass by in the clear. To combat this, wireless networks have encryption like WEP/WPA that presents a barrier to eavesdroppers.

The recent popularity of Internet-enabled mobile devices has fueled an explosion of unencrypted public WiFi access points. Whether they're installed by the IT team in a corporate office or by a mom and pop coffee shop owner, all these networks have one thing in common. They are the red light districts of Internet access. While the services on offer may be tempting, viruses, embarrassment, and theft await the unwary.

You simply cannot trust these networks. Not just because the guy next to you may be running BackTrack, but also because the access point may have been compromised at installation, or someone may be running a man-in-the-middle attack. Once the access point is compromised, applications that run over https are also potentially vulnerable. Even if the network is clean, draconian laws in some jurisdictions require all WiFi providers to keep a log of their customers' behavior.

One solution that is easy to set up is to tunnel your traffic over ssh to your own squid proxy. I already mentioned this back in HPR episode 227 but I wanted to go into a bit more detail here.

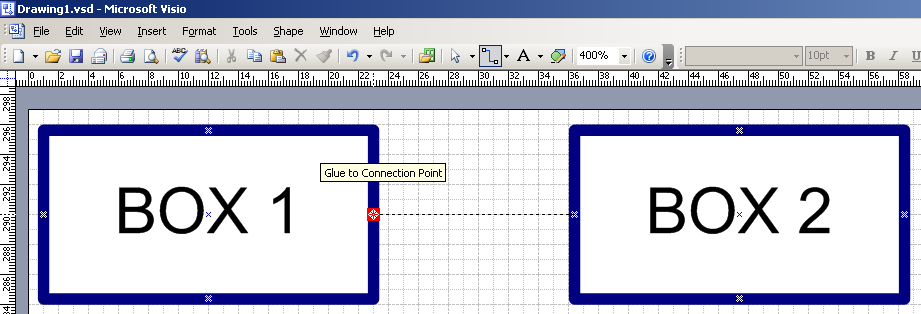

This solution takes advantage of the fact that most browsers support accessing the Internet via a proxy server. The idea of a proxy server is to allow controlled access out of an internal network to the Internet. Instead of trying to contact the Internet site directly, the browser sends the request to the proxy server, which relays it to the Internet and passes the response back to the browser. Normally you connect to a proxy on a local area network, but we don't trust the local network any more. So we are going to set up the proxy server on a network that we do trust, and use that as the exit point. The traffic between the browser and the proxy server will be encrypted for us by a secure shell tunnel.

The proxy server will be set up to only allow connections from its loopback address, or to put it simply, only from processes running on the server itself. The web browser will send requests to its own loopback address on the laptop. The ssh client running on the same laptop will encrypt the packets and send them over the hostile network to the ssh server process listening on the Internet address of your home server. The sshd process will decrypt these packets and forward them to the server's loopback address, where the squid application is listening.

The loopback address is in a special range of network addresses that allows applications running on the same server to communicate with each other over IP. In IPv4 it is actually a class A address range, 127.0.0.0/8, and is defined by RFC 3330. Although there are 16,777,214 addresses reserved for that network, in most cases only one address, 127.0.0.1, is set up. In IPv6 only one address, ::1, is defined.
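
The 16,777,214 figure follows directly from the /8 prefix length. As a small sketch (the variable names are just for illustration), the arithmetic can be checked in the shell:

```shell
# Size of the IPv4 loopback network 127.0.0.0/8.
prefix_len=8
host_bits=$((32 - prefix_len))

# 2^24 addresses in total, minus the network address (127.0.0.0)
# and the broadcast address (127.255.255.255).
usable=$(( (1 << host_bits) - 2 ))
echo "$usable"
```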

You can check the status of your loopback address by typing ifconfig lo

user@pc:~$ ifconfig lo

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:16436 Metric:1

RX packets:88 errors:0 dropped:0 overruns:0 frame:0

TX packets:88 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:0

RX bytes:5360 (5.3 KB) TX bytes:5360 (5.3 KB)

Setting up the proxy server.

If you’ve heard HPR episode 227 then you are already running squid but for those that didn’t make it through, here’s a quick summary:

sudo apt-get install squid

That's it. You now have the squid proxy server running and listening for traffic on port 3128. The default configuration on Debian and Ubuntu needs no modification, as it only allows access out to the Internet if the connections originate from the loopback address.

You can use netstat to find out if it actually is listening on port 3128.

user@server:~$ sudo netstat -anp | grep 3128

[sudo] password for user:

tcp 0 0 127.0.0.1:3128 0.0.0.0:* LISTEN 1948/(squid)

user@pc:~$ man netstat

...

DESCRIPTION

Netstat prints information about the Linux networking subsystem. ...

...

-a, --all

Show both listening and non-listening sockets. With the --interfaces

option, show interfaces that are not up

...

--numeric , -n

Show numerical addresses instead of trying to determine symbolic host,

port or user names.

...

-p, --program

Show the PID and name of the program to which each socket belongs.

...

This tells us that the squid process with pid 1948 is listening for TCP connections on the loopback address 127.0.0.1 and port number 3128. We can even connect to the port and see what happens. Open two sessions to your server. In one we will monitor the squid log file using the tail command, and in the other we'll connect to port 3128 using the telnet program.

On the first screen:

user@server:~$ sudo tail -f /var/log/squid/access.log

Then on the other screen:

user@server:~$ telnet localhost 3128

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

Then type anything here and press Enter. You will get squid's standard HTML 400 Bad Request response back.

HTTP/1.0 400 Bad Request

Server: squid/2.7.STABLE6

Date: Mon, 05 Jul 2010 19:18:56 GMT

Content-Type: text/html

Content-Length: 1210

X-Squid-Error: ERR_INVALID_REQ 0

X-Cache: MISS from localhost

X-Cache-Lookup: NONE from localhost:3128

Via: 1.0 localhost:3128 (squid/2.7.STABLE6)

Connection: close

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN" "http://www.w3.org/TR/html4/loose.dtd">

ERROR: The requested URL could not be retrieved

ERROR

The requested URL could not be retrieved

While trying to process the request:

!!!!!!!!!! type anything here and press enter !!!!!!!!!!

The following error was encountered:

- Invalid Request

Some aspect of the HTTP Request is invalid. Possible problems:

- Missing or unknown request method

- Missing URL

- Missing HTTP Identifier (HTTP/1.0)

- Request is too large

- Content-Length missing for POST or PUT requests

- Illegal character in hostname; underscores are not allowed

Your cache administrator is webmaster.

Generated Mon, 05 Jul 2010 19:18:56 GMT by localhost (squid/2.7.STABLE6)

Connection closed by foreign host.

user@server:~$

On the other screen you will see the error

1278358230.552 0 127.0.0.1 TCP_DENIED/400 1503 NONE NONE:// - NONE/- text/html
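
The telnet test deliberately sends garbage, which is why squid answers with a 400. For comparison, here is a rough sketch of what a well-formed proxy request looks like: unlike a request sent straight to a web server, the request line carries the full URL. The function name `proxy_request` is made up for this example, and the usage line assumes that `nc` (netcat) is installed on the server.

```shell
# Print a minimal HTTP/1.0 request in the form a proxy expects:
# the request line contains the complete URL, not just the path.
proxy_request() {
    printf 'GET %s HTTP/1.0\r\n\r\n' "$1"
}

# Usage (assumes squid is listening on localhost:3128 and nc is installed):
#   proxy_request http://example.com/ | nc localhost 3128
```

If squid accepts the request, the access log in the other session should show an entry for the URL rather than another TCP_DENIED/400 line.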

Configure ssh server

If you need to install ssh you can do so with the following command

sudo apt-get install openssh-server

You will need to modify your firewall/modem to allow incoming ssh connections to your ssh server. Now we come across a minor problem. In an attempt to restrict access to web surfing, many of these hot-spots restrict outbound traffic to just http (port 80) and https (port 443) traffic. So your ssh client may not be able to connect to the ssh server because the default ssh port 22 may be blocked. The problem is easily fixed by telling the ssh client to use a port number that isn’t blocked by the hot-spot and that you’re not already using on your home router. You just need to pick a suitable port.

By the way, you can look up common port numbers in the file /etc/services.
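
On most Linux systems the port-to-name mappings live in /etc/services. A small helper along these lines can pull out the name registered for a TCP port; `port_name` is a hypothetical function written for this example, not a standard tool.

```shell
# Look up the service name registered for a TCP port.
# $1: port number
# $2: services file to search (defaults to /etc/services)
port_name() {
    awk -v want="$1/tcp" '$2 == want { print $1; exit }' "${2:-/etc/services}"
}

# Usage:
#   port_name 443    # typically prints "https"
#   port_name 22     # typically prints "ssh"
```

If nothing is printed, no service is registered for that port in the file.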

Using port 80 is probably the best, unless you are running your own web server at home. In my case Mr. Yeats is running a mirror of nl.lottalinuxlinks.org, so my next option is to use port 443 (https). It's unlikely that hot-spots block that port, or the other customers will complain that they can't log in to Facebook.

You can approach this in two ways.

- Set up a NAT port translation on the firewall. In that setup Internet clients connect to your external IP address on port 443 and your router redirects that to port 22 of your internal server.

- Allow port 443 to pass your firewall and hit the server directly. In that case the sshd server itself needs to listen on port 443.

The procedure for setting up port forwarding on your home device will vary. These are the settings on my Speedtouch ADSL modem.

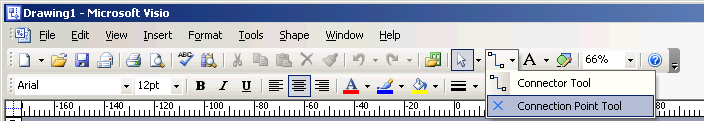

The other option is to set up your ssh server to also listen on port 443. Here again you can use netstat to find out if port 443 is available. Run the following command, and if nothing is returned then the port is free.

user@pc:~$ sudo netstat -anp | grep 443

user@pc:~$ man netstat

...

DESCRIPTION

Netstat prints information about the Linux networking subsystem. ...

...

-a, --all

Show both listening and non-listening sockets. With the --interfaces option, show interfaces that are not up

...

--numeric , -n

Show numerical addresses instead of trying to determine symbolic host, port or user names.

...

-p, --program

Show the PID and name of the program to which each socket belongs.

...

Assuming that the port is available, you can edit the file /etc/ssh/sshd_config and add the line Port 443 under the existing Port 22 entry. After restarting the ssh daemon your home server should be listening on port 443 as well as port 22.

user@pc:~$ sudo grep Port /etc/ssh/sshd_config

Port 22

user@pc:~$ sudo netstat -anp | grep sshd

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 917/sshd

tcp6 0 0 :::22 :::* LISTEN 917/sshd

user@pc:~$ sudo vi /etc/ssh/sshd_config

user@pc:~$ sudo /etc/init.d/ssh restart

* Restarting OpenBSD Secure Shell server sshd [ OK ]

user@pc:~$ sudo grep Port /etc/ssh/sshd_config

Port 22

Port 443

user@pc:~$ sudo netstat -anp | grep sshd

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 1790/sshd

tcp 0 0 0.0.0.0:443 0.0.0.0:* LISTEN 1790/sshd

tcp6 0 0 :::22 :::* LISTEN 1790/sshd

tcp6 0 0 :::443 :::* LISTEN 1790/sshd

user@pc:~$
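
A typo in the sshd configuration can lock you out of a remote server, so it may be worth checking the file before restarting the daemon. The sketch below lists the configured ports; `sshd_ports` is a hypothetical helper written for this example. The `sshd -t` command mentioned in the usage notes is OpenSSH's test mode, which only checks the configuration for errors.

```shell
# List every port configured in an sshd configuration file.
# Commented-out entries such as "#Port 22" are ignored.
# $1: config file (defaults to /etc/ssh/sshd_config)
sshd_ports() {
    awk 'tolower($1) == "port" { print $2 }' "${1:-/etc/ssh/sshd_config}"
}

# Usage, before restarting the daemon:
#   sudo sshd -t     # test mode; you may need the full path, e.g. /usr/sbin/sshd
#   sshd_ports       # expect 22 and 443, one per line
```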

Once the external port is set up to listen on 443 you should be able to connect to it from outside your network. You will only be able to do this from inside your network if you have set sshd itself to listen on port 443. If you implemented port forwarding on the router instead, you won't be able to connect from the internal network because your traffic will never be routed to the external port of your firewall/modem.

If you don't have a permanent IP address you'll need to set up a dynamic DNS service so that you will know which external address is configured on your router.

You can now check that you can connect from an external location to your server on port 443 by running the following command:

user@pc:~$ man ssh

...

-p port

Port to connect to on the remote host. This can be specified on a per-host basis in the configuration file.

...

user@pc:~$ ssh -p 443 user@example.com

Assuming you were able to log in, you can disconnect so that we can connect again, this time with port forwarding enabled. As we know, squid listens on port 3128, so now you need to open an ssh session that will tunnel the traffic sent to port 3128 on the localhost IP address on the client to localhost port 3128 on the server at the other end. You can do this by running the following command:

user@pc:~$ man ssh

...

-L [bind_address:]port:host:hostport

Specifies that the given port on the local (client) host is to be forwarded to the given host and port on the remote side.

...

user@pc:~$ ssh -L 3128:localhost:3128 -p 443 user@example.com

...

You should now have an ssh session open to your home server and everything will look very much as before. The difference is that the ssh client is now also listening on port 3128 on your laptop and forwarding that traffic to the server. You can check this using netstat.

user@pc:~$ sudo netstat -anp | grep 3128

[sudo] password for user:

tcp 0 0 127.0.0.1:3128 0.0.0.0:* LISTEN 1872/ssh

tcp6 0 0 ::1:3128 :::* LISTEN 1872/ssh

This shows that the ssh (client) process with pid 1872 is listening for TCP connections on the loopback address 127.0.0.1 and port number 3128.
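
If you only want the tunnel and not the login shell, ssh can be asked to skip the remote command and drop into the background. The following is a sketch rather than a finished script: `open_tunnel` and the `DRY_RUN` switch are names invented for this example, while `-f`, `-N`, `-L` and `-p` are standard OpenSSH client options.

```shell
# Open the tunnel without an interactive shell.
#   -N  do not run a remote command (forwarding only)
#   -f  go to the background after authentication
# Set DRY_RUN=1 to print the command instead of running it.
# $1: remote login, e.g. user@example.com
# $2: ssh port on the server (default 443)
# $3: proxy port to forward (default 3128)
open_tunnel() {
    remote="$1"
    ssh_port="${2:-443}"
    proxy_port="${3:-3128}"
    set -- ssh -f -N -L "${proxy_port}:localhost:${proxy_port}" -p "$ssh_port" "$remote"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Usage:
#   open_tunnel user@example.com
```

Because the process runs in the background, you would stop the tunnel later by killing that ssh process.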

Now all you have to do is set your browser to use a proxy of localhost on port 3128.
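
A quick way to confirm that the whole chain works, independent of any browser settings, is to fetch a page through the tunnel from the command line. This sketch assumes curl is installed on the laptop; `check_proxy` is a hypothetical helper, and `-x` is curl's option for naming the proxy to use.

```shell
# Fetch a URL through the proxy and print the HTTP status code.
# Returns non-zero if no HTTP response was received at all.
# $1: URL to fetch (defaults to http://example.com/)
# $2: proxy to use (defaults to the tunnel on localhost:3128)
check_proxy() {
    code=$(curl -s -o /dev/null -w '%{http_code}' \
        -x "${2:-http://localhost:3128}" "${1:-http://example.com/}")
    echo "$code"
    # curl reports 000 when it could not get an HTTP response
    [ -n "$code" ] && [ "$code" != "000" ]
}

# Usage:
#   check_proxy && echo "tunnel and proxy are working"
```

While this runs, the squid access log on the server should show the request arriving from 127.0.0.1.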